Constraint-Driven Software Development with Autonomous Agents (feat. Alex Morris)

AI agents are transforming software development by automating code generation, testing, and deployment, shifting engineers from writing code to defining constraints, auditing systems, and providing judgment.

In this conversation, Alex Morris, Chief Tribe Officer at Tribecode, discusses the transformative impact of AI on software engineering, emphasizing the shift towards autonomous code generation and the evolving roles of engineers and product managers. He highlights the importance of adapting to new tools, the necessity of upskilling, and the changing dynamics of client interactions. The discussion also touches on job security for engineers in an AI-driven world and the potential for increased productivity and efficiency in software development processes.

Krish and Alex delve into various themes surrounding the future of work, the evolution of software development skills, the impact of AI on job markets, and the role of education in the modern workforce. They discuss the changing landscape of tech innovation globally, the implications of outsourcing, and the skepticism surrounding AI and data centers. They also cover market trends, economic concerns, and their own aspirations for the future.

Introduction

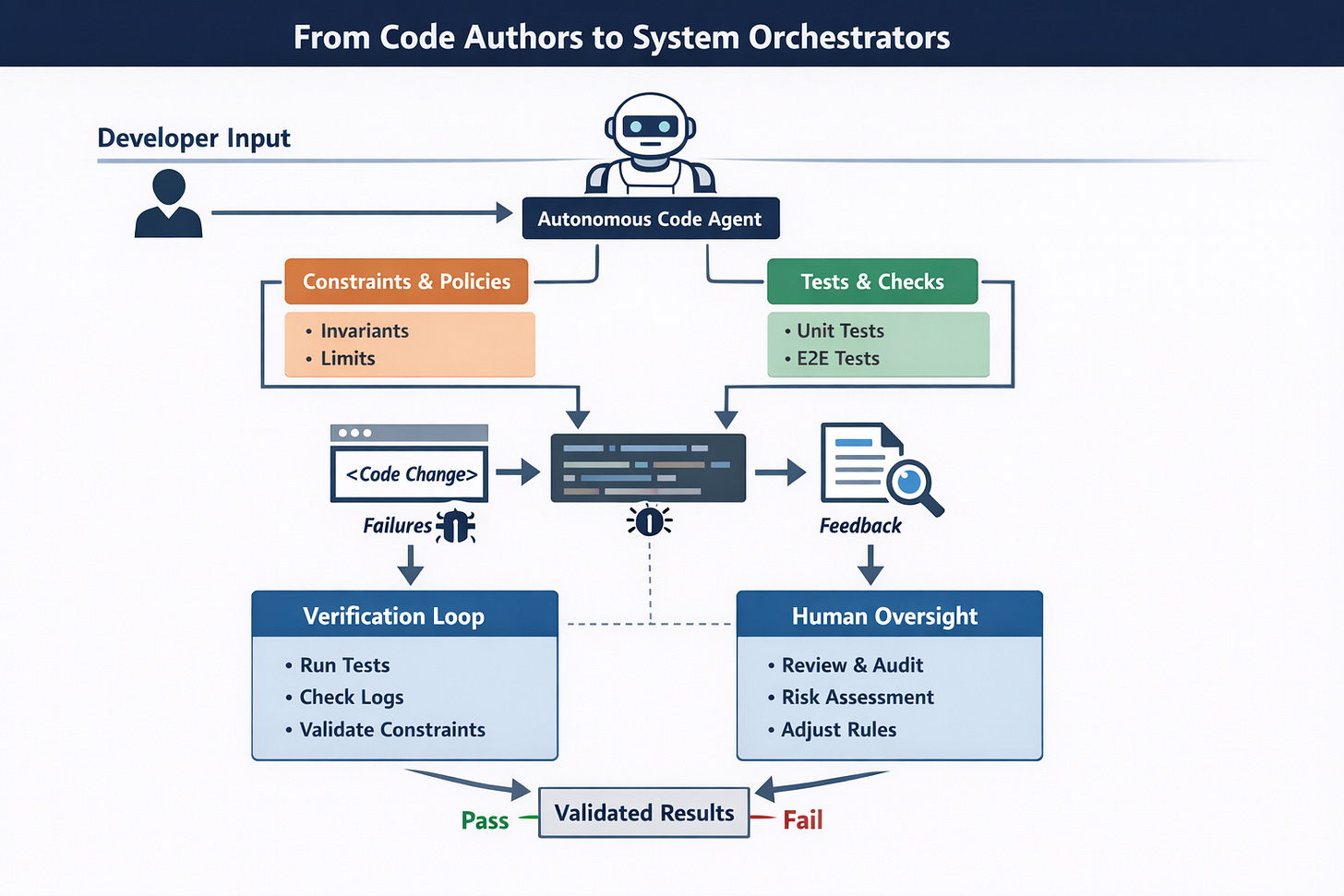

Software development is undergoing a structural shift. The change is not that code is being generated instead of written—that transition already happened. The deeper change is that software systems are increasingly built, modified, and maintained by autonomous agents operating inside constraints defined by humans. Developers are moving from being primary code authors to becoming system designers, auditors, and orchestrators.

Podcast

From Code Authoring to System Steering: Software Development in the Age of Autonomous Agents, available on Apple Podcasts and Spotify.

Autonomous Code Is Not “Better Autocomplete”

Modern code agents are not simply faster IDE assistants. They operate across repositories, execute tests, inspect logs, browse documentation, and iteratively refine solutions. The important distinction is that they act, not just suggest.

An autonomous coding agent typically:

Reads and reasons across multiple files and services

Generates and modifies code in batches, not lines

Executes tests or scripts to validate assumptions

Revises its output based on failures or telemetry

Documents its own decisions after the fact

This changes the unit of work. Developers are no longer optimizing keystrokes; they are optimizing feedback loops.

The New Center of Gravity: Constraints and Verification

As agents become capable of producing large volumes of code quickly, the bottleneck moves upstream. The hardest problems are no longer “how do I implement this?” but:

What must never happen in this system?

How do we detect silent failures?

What invariants must always hold?

What signals prove correctness beyond compilation?

In practice, this means constraints matter more than specifications. A list of desired features is weak input for an agent. A set of invariants, failure conditions, security boundaries, and performance budgets is strong input.

Well-designed agent workflows typically begin with:

Explicit system constraints

Clear definitions of success and failure

Executable tests or checks

Observability hooks from day one

If these are missing, agents will still generate code—but it will drift, hallucinate integrations, or pass superficial checks while violating core assumptions.

Testing Becomes the Primary Interface

In traditional workflows, tests validate human-written code. In agent-driven workflows, tests guide code generation itself.

Agents perform best when:

Tests exist before or alongside code generation

End-to-end checks exist, not just unit tests

UI and API behavior can be verified automatically

Logs and metrics are treated as first-class outputs

Tools like browser automation, API contract tests, and property-based testing are no longer “nice to have.” They are the control surfaces that keep autonomous systems aligned.

Without strong test scaffolding, agents behave like fast interns with infinite confidence and no intuition for danger.
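Property-based testing is one of those control surfaces: instead of a few hand-picked inputs, it checks a rule against many generated ones. A minimal sketch using the standard library's `testing/quick`; `normalizeQuery` is a hypothetical query-cleanup helper, and the two properties (idempotence, and no leading, trailing, or doubled spaces) are illustrative.

```go
package main

import (
	"strings"
	"testing/quick"
)

// normalizeQuery is a hypothetical helper: it trims the query and collapses
// internal whitespace runs to single spaces.
func normalizeQuery(q string) string {
	return strings.Join(strings.Fields(q), " ")
}

// idempotent: normalizing twice must equal normalizing once.
func idempotent(q string) bool {
	once := normalizeQuery(q)
	return normalizeQuery(once) == once
}

// noEdgeOrDoubleSpaces: output never starts/ends with whitespace or contains "  ".
func noEdgeOrDoubleSpaces(q string) bool {
	out := normalizeQuery(q)
	return out == strings.TrimSpace(out) && !strings.Contains(out, "  ")
}

// checkProperties runs each property against random strings generated by
// testing/quick; a non-nil error describes the failing input.
func checkProperties() error {
	cfg := &quick.Config{MaxCount: 1000}
	for _, prop := range []func(string) bool{idempotent, noEdgeOrDoubleSpaces} {
		if err := quick.Check(prop, cfg); err != nil {
			return err
		}
	}
	return nil
}
```

In a `_test.go` file, `checkProperties` would be called from a `TestXxx` function that reports failures with `t.Fatal(err)`. `testing/quick` is frozen, so Go's native fuzzing (`go test -fuzz`, Go 1.18+) is a common alternative for the same idea.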

Human Value Shifts to Judgment and Structure

What developers uniquely contribute is not syntax knowledge. Agents are already language-agnostic at a practical level. Human value concentrates in areas where judgment, context, and trade-offs dominate.

This includes:

Decomposing ambiguous problems into solvable components

Identifying hidden risks and edge cases

Choosing architectural boundaries that survive scale

Deciding what not to build

Auditing agent decisions and correcting course

Developers who thrive are those who can look at an agent’s output and immediately ask: what assumption is this making that might be wrong?

That ability comes from experience, not tooling.

Git Discipline Becomes a Force Multiplier

When multiple agents generate parallel changes, version control hygiene becomes critical infrastructure.

High-functioning teams emphasize:

Small, isolated changesets

Aggressive branching and rebasing discipline

Frequent rollbacks without fear

Clear commit intent and history

Machine-generated documentation attached to changes

Poor Git hygiene no longer costs hours—it can cost days of disentangling automated changes. In an agent-driven environment, organizational entropy compounds faster.
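“Small, isolated changesets” is easy to enforce mechanically before an agent's branch is reviewed. A minimal sketch, assuming the guard is fed the output of `git diff --numstat` (one `added<TAB>deleted<TAB>path` line per file, with `-` counts for binary files); `checkChangeset`, `Budget`, and the limits used with them are hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// Budget is a hypothetical per-change limit an agent's diff must respect.
type Budget struct {
	MaxFiles int
	MaxLines int // added + deleted
}

// checkChangeset parses `git diff --numstat` output and rejects diffs that
// exceed the budget. Binary files ("-\t-\tpath") count as files but add no lines.
func checkChangeset(numstat string, b Budget) error {
	files, lines := 0, 0
	sc := bufio.NewScanner(strings.NewReader(numstat))
	for sc.Scan() {
		fields := strings.SplitN(sc.Text(), "\t", 3)
		if len(fields) != 3 {
			continue // skip blank or unexpected lines
		}
		files++
		for _, f := range fields[:2] {
			if f == "-" {
				continue // binary file: no line counts
			}
			n, err := strconv.Atoi(f)
			if err != nil {
				return fmt.Errorf("bad numstat line %q: %w", sc.Text(), err)
			}
			lines += n
		}
	}
	if err := sc.Err(); err != nil {
		return err
	}
	if files > b.MaxFiles || lines > b.MaxLines {
		return fmt.Errorf("changeset too large: %d files, %d lines (budget %d files, %d lines)",
			files, lines, b.MaxFiles, b.MaxLines)
	}
	return nil
}
```

In CI this might be fed from `git diff --numstat origin/main...HEAD`; the budget itself is a team choice, not a universal number.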

Documentation Is No Longer Optional

Because agents can generate documentation as easily as code, failing to document is no longer a time trade-off—it is a coordination failure.

Modern workflows treat documentation as:

A synchronization mechanism for humans and agents

A memory layer for future automation

A constraint surface for future changes

If a system is not documented, agents will invent explanations. Humans will misinterpret intent. Both outcomes are expensive.
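Documentation works as a constraint surface only if at least part of it is checkable. A minimal sketch, assuming a hypothetical team rule that every Go package carries a package doc comment; it uses the standard `go/parser` package, and `hasPackageDoc` is an illustrative name.

```go
package main

import (
	"go/parser"
	"go/token"
	"strings"
)

// hasPackageDoc reports whether a Go source file has a doc comment directly
// above its package clause. A comment separated by a blank line does not count.
func hasPackageDoc(filename, src string) (bool, error) {
	fset := token.NewFileSet()
	// ParseComments is required; without it, comments (and f.Doc) are dropped.
	f, err := parser.ParseFile(fset, filename, src, parser.ParseComments)
	if err != nil {
		return false, err
	}
	return f.Doc != nil && strings.TrimSpace(f.Doc.Text()) != "", nil
}
```

A CI step could walk the repository with `filepath.WalkDir` and fail when no file in a package passes, which gives agents an unambiguous signal that documentation is part of “done.”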

Team Size Shrinks, Responsibility Expands

One of the paradoxes of autonomous development is that fewer people can now do more—but the remaining people carry more responsibility.

Teams shrink not because engineering is less important, but because:

Execution is cheaper

Validation is the new bottleneck

Mistakes propagate faster

Oversight matters more than output

This favors engineers who can operate across domains: backend, frontend, infra, testing, and product reasoning. Narrow specialization without system awareness becomes fragile.

The Future Developer Is a Systems Operator

The emerging role looks less like a traditional coder and more like a systems operator for software intelligence.

That role involves:

Designing constraint-driven workflows

Supervising fleets of agents

Auditing decisions and outcomes

Rapidly iterating on system structure

Closing loops quickly and moving on

The metric of success is no longer lines of code or features shipped. It is cases closed per unit time without regressions.

(ChatGPT-Generated) Snippets for Golang Developers

Developer Section: Constraint-Driven Agent Workflow in Go (Copy/Paste Snippets)

If you’re building with Go, the fastest way to make agents productive and safe is to treat tests + constraints + CI as the control system. Agents can generate code quickly, but without hard verification loops they’ll drift. Strong test harnesses and auditable changes are the “steering wheel.”

1) Put constraints in the repo (agents must read this first)

```markdown
# docs/constraints.md

## Non-negotiables

- No breaking API changes without version bump + migration notes.
- All handlers must enforce: authn/authz + request validation.
- Every write endpoint must be idempotent (retries happen).
- P95 latency budget: /v1/search <= 150ms staging, 250ms prod.
- No goroutine leaks in request path.

## Definition of done

- Unit tests updated/added
- At least one integration or contract test updated/added
- Structured logs on error paths
- Docs updated (architecture/runbook)
```

In your agent/system prompt (Cursor/Claude Code/etc.):

```text
Read docs/constraints.md before edits.
Do not mark work complete unless `make test` and `make lint` pass.
If uncertain, add a test to lock in the assumption.
```

2) A Go HTTP handler with explicit dependencies (testable + agent-friendly)

```go
// internal/http/handlers/search.go
package handlers

import (
	"encoding/json"
	"net/http"
	"time"
)

type SearchService interface {
	Search(r *http.Request, q string) ([]any, error)
}

// Logger takes key/value pairs, in the style of log/slog.
type Logger interface {
	Error(msg string, kv ...any)
	Info(msg string, kv ...any)
}

type SearchHandler struct {
	Svc SearchService
	Log Logger
}

func (h SearchHandler) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	start := time.Now()

	// Request validation: reject empty queries before calling the service.
	q := r.URL.Query().Get("q")
	if q == "" {
		http.Error(w, "missing q", http.StatusBadRequest)
		return
	}

	results, err := h.Svc.Search(r, q)
	if err != nil {
		h.Log.Error("search_failed", "err", err, "q", q)
		http.Error(w, "search failed", http.StatusInternalServerError)
		return
	}
	// A nil slice would encode as JSON null; keep "results" an array.
	if results == nil {
		results = []any{}
	}

	w.Header().Set("Content-Type", "application/json")
	if err := json.NewEncoder(w).Encode(map[string]any{
		"q":       q,
		"results": results,
		"tookMs":  time.Since(start).Milliseconds(),
	}); err != nil {
		// Headers may already be sent, so log instead of changing the status.
		h.Log.Error("encode_failed", "err", err)
	}
}
```

This style (interfaces plus plain structs that are easy to construct in tests) is ideal when agents are making changes, because behavior is easy to validate without spinning up the whole app.

3) Unit tests with httptest (fast proof loop)

```go
// internal/http/handlers/search_test.go
package handlers

import (
	"errors"
	"net/http"
	"net/http/httptest"
	"testing"
)

type fakeSvc struct {
	res []any
	err error
}

func (f fakeSvc) Search(r *http.Request, q string) ([]any, error) {
	if f.err != nil {
		return nil, f.err
	}
	return f.res, nil
}

type nopLog struct{}

func (nopLog) Error(string, ...any) {}
func (nopLog) Info(string, ...any) {}

func TestSearchHandler_MissingQuery(t *testing.T) {
	h := SearchHandler{Svc: fakeSvc{}, Log: nopLog{}}
	req := httptest.NewRequest(http.MethodGet, "/v1/search", nil)
	rr := httptest.NewRecorder()

	h.ServeHTTP(rr, req)

	if rr.Code != http.StatusBadRequest {
		t.Fatalf("expected 400, got %d", rr.Code)
	}
}

func TestSearchHandler_ServiceError(t *testing.T) {
	h := SearchHandler{Svc: fakeSvc{err: errors.New("boom")}, Log: nopLog{}}
	req := httptest.NewRequest(http.MethodGet, "/v1/search?q=go", nil)
	rr := httptest.NewRecorder()

	h.ServeHTTP(rr, req)

	if rr.Code != http.StatusInternalServerError {
		t.Fatalf("expected 500, got %d", rr.Code)
	}
}

func TestSearchHandler_Success(t *testing.T) {
	h := SearchHandler{
		Svc: fakeSvc{res: []any{"a", "b"}},
		Log: nopLog{},
	}
	req := httptest.NewRequest(http.MethodGet, "/v1/search?q=go", nil)
	rr := httptest.NewRecorder()

	h.ServeHTTP(rr, req)

	if rr.Code != http.StatusOK {
		t.Fatalf("expected 200, got %d", rr.Code)
	}
	if ct := rr.Header().Get("Content-Type"); ct != "application/json" {
		t.Fatalf("expected json, got %q", ct)
	}
}
```

4) Contract tests that prevent “silent breaking changes”

Lock your response schema by asserting fields and types. This catches agent-generated “creative” refactors.

```go
// tests/contracts/search_contract_test.go
package contracts

import (
	"encoding/json"
	"net/http"
	"net/http/httptest"
	"testing"

	"yourapp/internal/http/handlers"
)

type fakeSvc struct{}

func (fakeSvc) Search(r *http.Request, q string) ([]any, error) { return []any{}, nil }

type nopLog struct{}

func (nopLog) Error(string, ...any) {}
func (nopLog) Info(string, ...any) {}

func TestSearchResponseContract(t *testing.T) {
	h := handlers.SearchHandler{Svc: fakeSvc{}, Log: nopLog{}}
	req := httptest.NewRequest(http.MethodGet, "/v1/search?q=go", nil)
	rr := httptest.NewRecorder()

	h.ServeHTTP(rr, req)

	if rr.Code != http.StatusOK {
		t.Fatalf("expected 200 got %d", rr.Code)
	}

	var body map[string]any
	if err := json.Unmarshal(rr.Body.Bytes(), &body); err != nil {
		t.Fatalf("invalid json: %v", err)
	}

	// Required keys (expand as needed)
	for _, k := range []string{"q", "results", "tookMs"} {
		if _, ok := body[k]; !ok {
			t.Fatalf("missing required key %q", k)
		}
	}

	// Type checks
	if _, ok := body["q"].(string); !ok {
		t.Fatalf("q must be string")
	}
	if _, ok := body["results"].([]any); !ok {
		t.Fatalf("results must be array")
	}
	if _, ok := body["tookMs"].(float64); !ok { // JSON numbers -> float64 in map decode
		t.Fatalf("tookMs must be number")
	}
}
```

5) “Agent-proof” lint + test loop via Makefile

```makefile
.PHONY: test lint fmt ci

test:
	go test ./... -race -count=1

lint:
	golangci-lint run

fmt:
	gofmt -w .

ci: fmt lint test
```

Developers run:

```bash
make ci
```

Agents run the same command. If it fails, they don’t “argue”; they iterate.

6) Minimal GitHub Actions CI for Go + golangci-lint

```yaml
# .github/workflows/ci.yml
name: CI

on:
  pull_request:
  push:
    branches: [main]

jobs:
  go:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - uses: actions/setup-go@v5
        with:
          go-version: "1.22"

      # The action installs golangci-lint and also runs it.
      - name: Run golangci-lint
        uses: golangci/golangci-lint-action@v6
        with:
          version: v1.59.1
          args: --timeout=5m

      - name: Test
        run: |
          go test ./... -race -count=1
```

7) Parallel agent work without collisions: git worktree

```bash
git worktree add ../wt-fix -b fix/search-timeout
git worktree add ../wt-feat -b feat/new-ranking
# Run separate agents in each directory safely
```

This prevents two agents from stomping on the same working directory, which is the fastest way to create unreviewable diffs.

Closing Thoughts

Autonomous code does not eliminate the need for developers. It eliminates the need for developers who only write code.

The engineers who remain indispensable are those who understand systems deeply enough to steer automation rather than compete with it. In that sense, software development is becoming less about typing and more about thinking—under pressure, at scale, and with leverage.

That is not the end of the profession. It is a compression of it toward its most valuable core.